Projects

Differential Evolution Optimization of the Broken Wing Butterfly Option Strategy

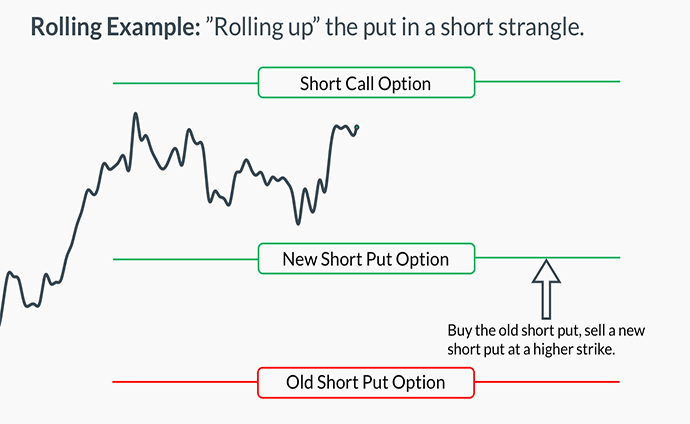

This research paper focuses on optimizing a trading strategy based on financial options. The option strategy is called the "put broken wing butterfly". It can be used as an income generator: the position is entered at regular time intervals, and option time decay is the primary mechanism by which a profit is achieved. The objective is to maximize profits and minimize volatility over a defined time period, and there are many parameters, each with a wide range, to choose from. The size of this search space grows exponentially with the number of parameters, which makes it infeasible to optimize with traditional or gradient-based methods. To optimize this problem, we used an evolutionary optimization technique known as differential evolution. It has been found to outperform other optimization techniques (such as genetic algorithms, simple evolutionary algorithms, and particle swarm optimization) on a variety of functions with real-valued variables.
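To make the structure concrete, here is a minimal Python sketch of the profit and loss at expiration of a put broken wing butterfly. It assumes the common construction of long one put at an upper strike, short two puts at a middle strike, and long one put at a lower strike, with the lower wing wider than the upper wing, plus a standard 100-share contract multiplier. The strikes and entry credit in the usage lines are hypothetical and are not the optimized structure found in this research.

```python
def put_payoff(strike: float, spot: float) -> float:
    """Intrinsic value of one put at expiration."""
    return max(strike - spot, 0.0)


def put_bwb_payoff(spot, upper, middle, lower, credit=0.0, multiplier=100):
    """P&L at expiration of a put broken wing butterfly (hypothetical helper).

    Structure assumed: long 1 put at `upper`, short 2 puts at `middle`,
    long 1 put at `lower`, with the lower wing wider than the upper wing.
    `credit` is the net premium received per share when entering.
    """
    if not (upper > middle > lower):
        raise ValueError("strikes must satisfy upper > middle > lower")
    if (middle - lower) <= (upper - middle):
        raise ValueError("the lower wing must be wider than the upper wing")
    value = (put_payoff(upper, spot)
             - 2 * put_payoff(middle, spot)
             + put_payoff(lower, spot))
    return (value + credit) * multiplier


if __name__ == "__main__":
    # Hypothetical strikes: 5-point upper wing, 10-point lower wing, 0.50 credit.
    for spot in (410, 395, 370):
        print(spot, put_bwb_payoff(spot, 400, 395, 385, credit=0.5))
```

With these example strikes the position keeps the credit (+50.0) if the underlying stays above the upper strike, peaks at +550.0 at the short strike, and loses at most 450.0 below the lower strike. Time decay, which the strategy relies on, only matters before expiration and is not modeled here.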

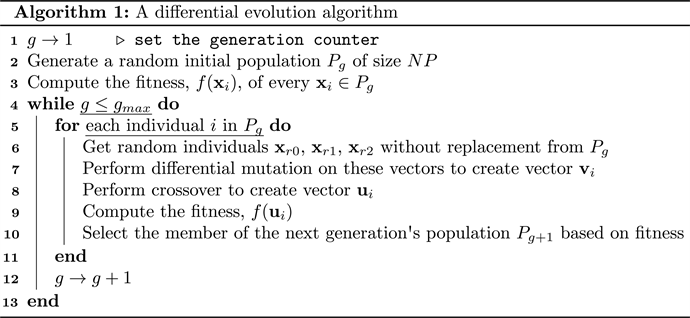

Pseudocode of the differential evolution algorithm that was adapted for this research
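The pseudocode above can be sketched in Python as the classic DE/rand/1/bin variant: mutate with a scaled difference of two random individuals added to a third, apply binomial crossover, then keep the trial vector only if it is no worse. This is a generic sketch assuming a fitness function to minimize (a profit-maximizing objective would be negated); the control parameters `f`, `cr`, `pop_size`, and `generations` are illustrative defaults, not the values adapted in this research.

```python
import numpy as np


def differential_evolution(fitness, bounds, pop_size=20, f=0.8, cr=0.9,
                           generations=200, seed=None):
    """Minimize `fitness` with the DE/rand/1/bin scheme (requires pop_size >= 4)."""
    rng = np.random.default_rng(seed)
    bounds = np.asarray(bounds, dtype=float)
    low, high = bounds[:, 0], bounds[:, 1]
    dim = len(bounds)
    pop = rng.uniform(low, high, size=(pop_size, dim))
    scores = np.array([fitness(x) for x in pop])

    for _ in range(generations):
        for i in range(pop_size):
            # Pick three distinct individuals, all different from i.
            candidates = [j for j in range(pop_size) if j != i]
            a, b, c = pop[rng.choice(candidates, 3, replace=False)]
            # Mutation: base vector plus scaled difference vector, kept in bounds.
            mutant = np.clip(a + f * (b - c), low, high)
            # Binomial crossover; force at least one gene from the mutant.
            cross = rng.random(dim) < cr
            cross[rng.integers(dim)] = True
            trial = np.where(cross, mutant, pop[i])
            # Greedy selection: the trial replaces its parent if it is no worse.
            trial_score = fitness(trial)
            if trial_score <= scores[i]:
                pop[i], scores[i] = trial, trial_score

    best = int(np.argmin(scores))
    return pop[best], scores[best]
```

For example, minimizing the sphere function `lambda x: float(np.sum(x**2))` over `[(-5, 5), (-5, 5)]` should converge close to the origin. Clipping mutants to the bounds is one simple way to keep trading parameters inside their allowed ranges; other boundary-handling rules are equally valid.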

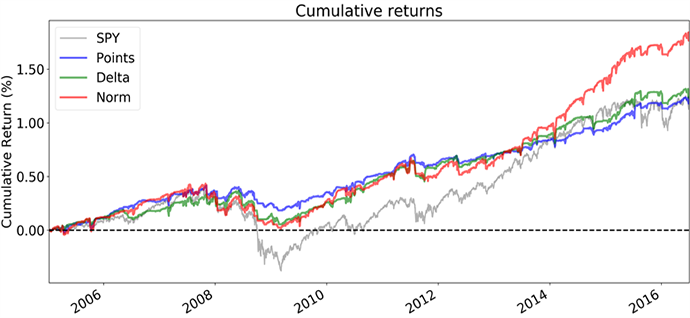

Cumulative returns of the optimized strategy. Note that it outperforms the SPY benchmark.

The main contributions of this work are twofold: 1) an optimized structure of a Broken Wing Butterfly option strategy, together with its trading parameters. This was obtained with a differential evolution algorithm operating on a fitness function that weighs the conflicting requirements of maximizing profit, achieving equity curve linearity, and minimizing the maximum drawdown; and 2) the finding that the normalized strike mapping method is integral to achieving the optimized performance. All results were obtained for the SPY exchange-traded fund.
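The fitness function described above weighs three competing terms. A minimal sketch, assuming the strategy's equity curve is available as an array of account values: profit as the final minus the initial value, linearity as the R² of a straight-line fit, and maximum drawdown as the largest peak-to-trough drop. The weights `w_profit`, `w_linear`, and `w_drawdown` are hypothetical placeholders; the paper's actual weighting is not given here.

```python
import numpy as np


def max_drawdown(equity):
    """Largest peak-to-trough drop of an equity curve, as a positive number."""
    equity = np.asarray(equity, dtype=float)
    running_peak = np.maximum.accumulate(equity)
    return float(np.max(running_peak - equity))


def linearity_r2(equity):
    """R^2 of a straight-line fit to the equity curve (1.0 = perfectly linear)."""
    equity = np.asarray(equity, dtype=float)
    t = np.arange(len(equity))
    slope, intercept = np.polyfit(t, equity, 1)
    residuals = equity - (slope * t + intercept)
    ss_tot = np.sum((equity - equity.mean()) ** 2)
    if ss_tot == 0:
        return 1.0  # a flat curve is trivially linear
    return float(1.0 - np.sum(residuals ** 2) / ss_tot)


def fitness(equity, w_profit=1.0, w_linear=1.0, w_drawdown=1.0):
    """Hypothetical weighted score to maximize (weights are placeholders)."""
    equity = np.asarray(equity, dtype=float)
    profit = equity[-1] - equity[0]
    return (w_profit * profit
            + w_linear * linearity_r2(equity)
            - w_drawdown * max_drawdown(equity))
```

Because profit and drawdown are in currency units while R² lies between 0 and 1, in practice the weights have to put the terms on comparable scales. To use this score with a minimizing optimizer, pass its negative.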

Using Python and Matlab, I implemented several machine learning algorithms to classify and predict data, including neural networks, k-means, k-nearest neighbors, support vector machines (SVM), and reinforcement learning.

Neural network training accuracy over time for different numbers of hidden layers, classifying the MNIST digits dataset. Training accuracy improves as the number of hidden layers increases.

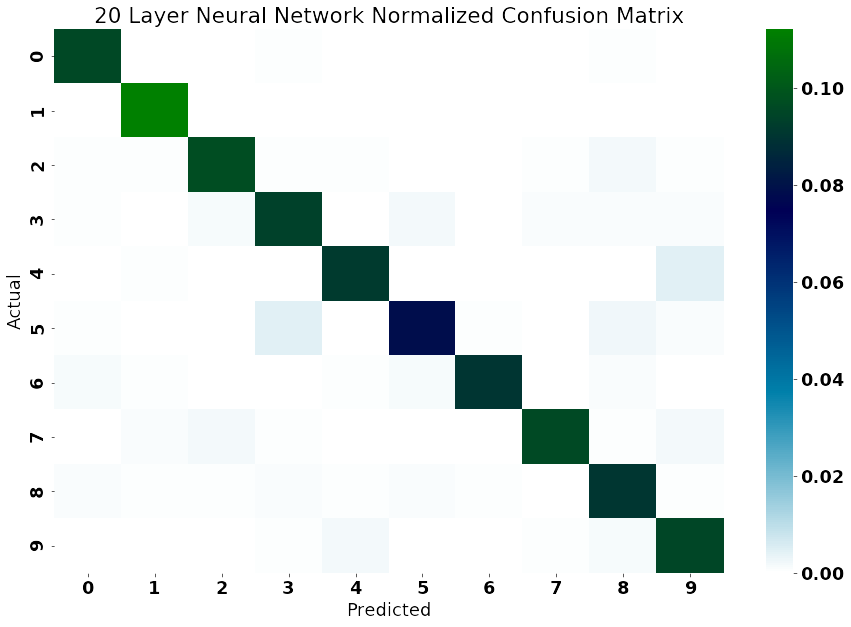

Normalized confusion matrix of the neural network classification using 20 hidden layers
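A normalized confusion matrix divides each row of counts by the number of samples of that true class, so the diagonal shows per-class recall. A minimal NumPy sketch, assuming integer class labels and row-wise normalization (scikit-learn's `confusion_matrix(..., normalize='true')` follows the same convention); the helper name is hypothetical.

```python
import numpy as np


def normalized_confusion_matrix(y_true, y_pred, n_classes):
    """Confusion matrix whose rows (true classes) each sum to 1."""
    counts = np.zeros((n_classes, n_classes), dtype=float)
    for t, p in zip(y_true, y_pred):
        counts[t, p] += 1  # row = true class, column = predicted class
    row_sums = counts.sum(axis=1, keepdims=True)
    # Classes absent from y_true keep an all-zero row instead of dividing by zero.
    return np.divide(counts, row_sums, out=np.zeros_like(counts), where=row_sums > 0)
```

For example, true labels `[0, 0, 1, 1]` with predictions `[0, 1, 1, 1]` give `[[0.5, 0.5], [0.0, 1.0]]`: half of class 0 is misclassified, while class 1 is always correct.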

Spam classification accuracy of a support vector machine (SVM) classifier on the Spambase dataset as more features are used. The classifier decides whether an email is spam based on 54 features. This animation compares a random selection of n features against a weight-based selection of the top n features. Selecting the top-weighted features performs better when fewer features are used.
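The weight-based selection compared in the animation can be sketched as ranking features by the absolute value of a trained linear SVM's weights and keeping the top n, versus drawing n features at random. This sketch assumes the weight vector is already available (for example from scikit-learn's `LinearSVC().coef_[0]`); the helper names are hypothetical. The classifier would then be retrained on `X[:, indices]` for each n.

```python
import numpy as np


def top_n_by_weight(weights, n):
    """Indices of the n features with the largest absolute linear-model weight."""
    weights = np.asarray(weights, dtype=float)
    return np.argsort(-np.abs(weights))[:n]


def random_n(n_features, n, seed=None):
    """Indices of n features drawn uniformly at random, without replacement."""
    rng = np.random.default_rng(seed)
    return rng.choice(n_features, size=n, replace=False)
```

Ranking by weight magnitude is only meaningful when the features are on comparable scales, so standardizing them before training the SVM is assumed.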

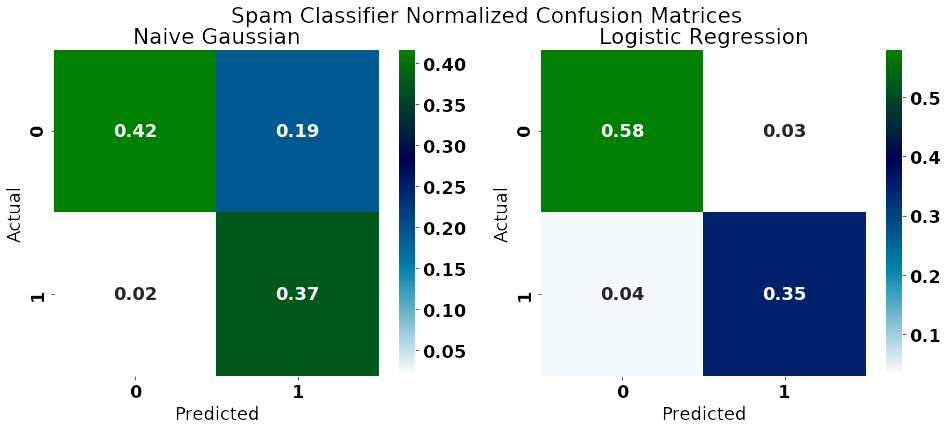

Normalized confusion matrices of the spam classification using naive Bayes and logistic regression classifiers.

To learn more about these projects, click the link below.

Using Python, I implemented a Q-learning-based agent that plays tic-tac-toe. Using deep Q-learning, I also implemented an agent that finds optimal rolling and exit conditions for financial option trading strategies.

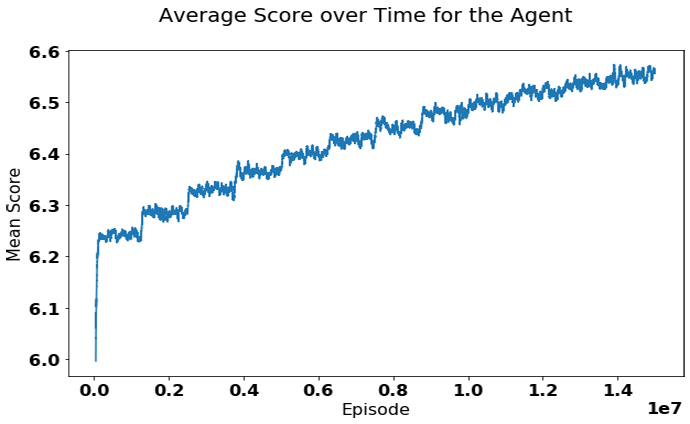

Average game score of the agent over time. After every Q-table update, the agent plays 10 tic-tac-toe games against a random opponent. The maximum possible score is 10 (1 point is awarded for a win, 0.5 for a tie, and 0 for a loss); an average score of 3.5 corresponds to random play.
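The tabular agent learns with the standard Q-learning update rule. A minimal sketch, assuming board states are encoded as strings (for example `"XX-OO----"`), actions are cell indices 0 to 8, and the Q-table is a `defaultdict(float)`; the learning rate, discount factor, and exploration rate are illustrative, not the values used for the plot. The `score` helper reproduces the scoring rule from the caption.

```python
import random
from collections import defaultdict


def q_update(q, state, action, reward, next_state, next_actions,
             alpha=0.1, gamma=0.9):
    """One Q-learning step:
    Q(s,a) <- Q(s,a) + alpha * (r + gamma * max_a' Q(s',a') - Q(s,a)).
    `next_actions` is empty when `next_state` is terminal.
    """
    best_next = max((q[(next_state, a)] for a in next_actions), default=0.0)
    td_target = reward + gamma * best_next
    q[(state, action)] += alpha * (td_target - q[(state, action)])


def epsilon_greedy(q, state, actions, epsilon=0.1, rng=random):
    """Explore with probability epsilon, otherwise pick the best known move."""
    if rng.random() < epsilon:
        return rng.choice(actions)
    return max(actions, key=lambda a: q[(state, a)])


def score(results):
    """Caption's scoring rule: 1 per win, 0.5 per tie, 0 per loss."""
    points = {"win": 1.0, "tie": 0.5, "loss": 0.0}
    return sum(points[r] for r in results)


if __name__ == "__main__":
    q = defaultdict(float)  # unseen (state, action) pairs start at 0.0
    # A winning final move earns reward 1; the game ends, so no next actions.
    q_update(q, "XX-OO----", 2, 1.0, "XXXOO----", [])
    print(q[("XX-OO----", 2)])  # 0.1 after one update with alpha=0.1
```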

An open-ended question for option traders is "when should I adjust or exit my position?", since rolling or exiting too early or too late can cause extra losses or miss potential gains. Because the reward of a decision is not immediately known (as in a game of chess), reinforcement learning is a potential solution. A deep Q-learning agent can be trained on various stock and options data to maximize the expected future reward.
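The core of deep Q-learning is regressing the network's Q-values toward bootstrapped temporal-difference targets. A minimal NumPy sketch of that step, assuming a hypothetical action set such as 0 = hold, 1 = roll, 2 = exit, and treating the closing of a position as the end of an episode; the networks that produce the Q-values, and the stock and options features fed to them, are not shown.

```python
import numpy as np


def dqn_targets(rewards, next_q_values, dones, gamma=0.99):
    """TD targets for a batch of transitions.

    rewards:       shape (batch,)
    next_q_values: shape (batch, n_actions), Q(s', .) from the target network
    dones:         shape (batch,), 1.0 if s' ends the episode (position closed)
    """
    rewards = np.asarray(rewards, dtype=float)
    next_q_values = np.asarray(next_q_values, dtype=float)
    dones = np.asarray(dones, dtype=float)
    # Value of the best next action, zeroed once the episode has ended.
    return rewards + gamma * (1.0 - dones) * next_q_values.max(axis=1)


def td_loss(q_values, actions, targets):
    """Mean squared error between Q(s, a) for the taken actions and the targets."""
    q_values = np.asarray(q_values, dtype=float)
    q_taken = q_values[np.arange(len(actions)), actions]
    return float(np.mean((q_taken - targets) ** 2))
```

Minimizing `td_loss` with respect to the online network's weights, while computing `next_q_values` from a periodically updated target network, is the usual way to keep these targets stable during training.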

To play tic-tac-toe against my agent, click the link below.

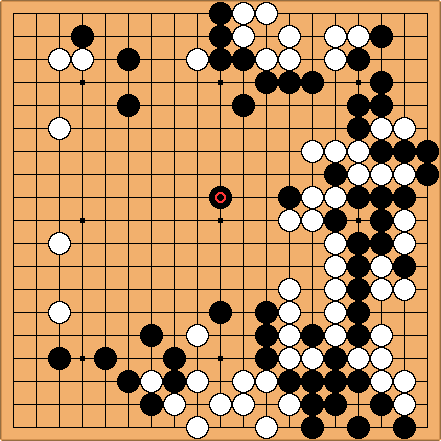

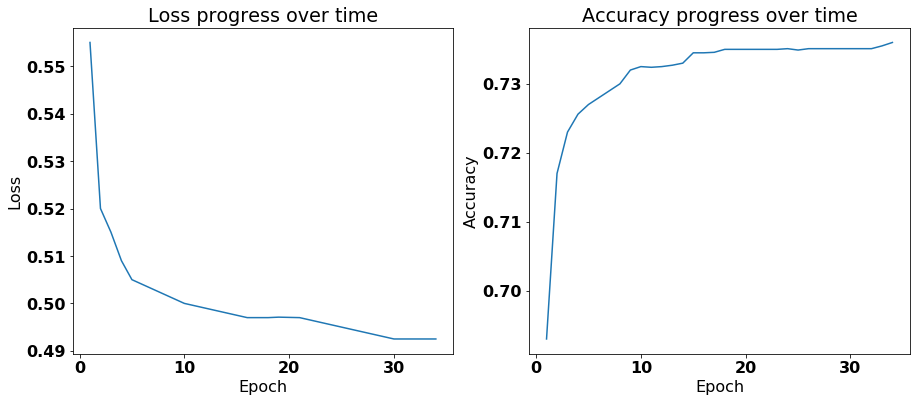

Using Python, I implemented a neural-network reward function for a board game that combines the games Othello and Go. To train the network, thousands of sample games were played and recorded. The state of the board was used as the input of the network, and the winner of the game as the label. After training, the network's reward function was used to play the game, looking several moves into the future to decide the next move. This player beat the benchmark player 95% of the time.
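Looking several moves ahead with the learned reward function amounts to a depth-limited minimax search that calls the network only at the leaves. A minimal sketch, assuming the game is exposed through three hypothetical callbacks (`evaluate`, `moves`, `apply_move`) and that `evaluate` scores a position from the point of view of the player choosing the move; the search depth actually used is not stated here.

```python
def minimax(state, depth, maximizing, evaluate, moves, apply_move):
    """Depth-limited minimax with a learned evaluation at the leaves.

    evaluate(state)      -> estimated value for the maximizing player
    moves(state)         -> list of legal moves (empty if the game is over)
    apply_move(state, m) -> successor state
    """
    legal = moves(state)
    if depth == 0 or not legal:
        return evaluate(state)
    values = (minimax(apply_move(state, m), depth - 1, not maximizing,
                      evaluate, moves, apply_move) for m in legal)
    return max(values) if maximizing else min(values)


def best_move(state, depth, evaluate, moves, apply_move):
    """Move with the highest look-ahead value for the player to move (depth >= 1)."""
    return max(moves(state),
               key=lambda m: minimax(apply_move(state, m), depth - 1, False,
                                     evaluate, moves, apply_move))
```

A toy tree such as `[[3, 5], [2, 9]]`, with lists as positions and numbers as leaf evaluations, is enough to check it: the root value is 3, and `best_move` picks branch 0.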

This game is similar to Go: there are two players, the state of the board is known to both players, and the players alternate turns.

After just 35 epochs, the loss decreases and the accuracy increases enough for the player to beat the baseline 95% of the time.

The player could be improved by training on games from stronger players and by implementing alpha-beta pruning over the tree of possible moves.
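Alpha-beta pruning returns the same value as plain minimax while skipping branches that cannot affect the decision, which would allow a deeper look-ahead for the same number of network evaluations. A minimal sketch, assuming the game is exposed through hypothetical `evaluate`, `moves`, and `apply_move` callbacks; call it with `alpha=-math.inf` and `beta=math.inf` at the root.

```python
import math


def alphabeta(state, depth, alpha, beta, maximizing, evaluate, moves, apply_move):
    """Minimax with alpha-beta pruning; returns the same value as plain minimax.

    alpha: best value the maximizer can already guarantee on this path
    beta:  best value the minimizer can already guarantee on this path
    """
    legal = moves(state)
    if depth == 0 or not legal:
        return evaluate(state)
    if maximizing:
        value = -math.inf
        for m in legal:
            child = apply_move(state, m)
            value = max(value, alphabeta(child, depth - 1, alpha, beta, False,
                                         evaluate, moves, apply_move))
            alpha = max(alpha, value)
            if alpha >= beta:
                break  # the minimizer will never allow this branch
        return value
    value = math.inf
    for m in legal:
        child = apply_move(state, m)
        value = min(value, alphabeta(child, depth - 1, alpha, beta, True,
                                     evaluate, moves, apply_move))
        beta = min(beta, value)
        if alpha >= beta:
            break  # the maximizer already has a better option elsewhere
    return value
```

On the toy tree `[[3, 5], [2, 9]]` it returns 3 after evaluating only the leaves 3, 5, and 2; the 9 is pruned because the minimizer can already hold the second branch to 2.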

Using Ruby on Rails, I built several web apps for personal use to challenge myself and learn new frameworks. They use a Material Design theme and follow accessibility, UX, and PWA best practices. Each was built from the ground up and deployed on Heroku, with images served from AWS. Using Rails' built-in test environment, I designed tests, including integration tests, to verify all functionality. The latest deployed site receives over 200 weekly visitors.

This webpage demonstrates a few PWA capabilities. It can be installed as an app on Android devices and iPhones. It is built with a mobile-first and offline-first methodology, and it caches resources as needed for faster loading. Built using Ruby on Rails and deployed on Heroku.


This site was built with a mobile and offline first methodology since it is to be used in South America, where internet speeds are more limited. This site is used to showcase various home decoration items for sale. Built using Ruby on Rails and deployed on Heroku.


This personal web app was built to control the Hue smart light system in my house. Unlike the Hue app (which can be slow and unresponsive), this web app is much faster and has similar functionality. It implements an endpoint that NFC tags can call to control the lights, effectively allowing taps on tags around the house to switch lights on.
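The NFC endpoint ultimately has to issue a light-state request to the Hue bridge. The app itself is written in Rails; this is a Python sketch, assuming the Hue bridge's v1 REST API (`PUT /api/<username>/lights/<id>/state` with a JSON body such as `{"on": true}`). The tag-to-light mapping, the tag IDs, and the helper names are hypothetical.

```python
import json
import urllib.request

# Hypothetical mapping from NFC tag IDs to the Hue light IDs each tap turns on.
TAG_ACTIONS = {"kitchen-tag": ["1", "2"], "bedroom-tag": ["5"]}


def hue_light_request(bridge_ip, username, light_id, on, brightness=None):
    """Build (but do not send) a Hue bridge v1 request that sets one light's state."""
    body = {"on": on}
    if brightness is not None:
        body["bri"] = max(1, min(254, int(brightness)))  # v1 brightness range: 1 to 254
    return urllib.request.Request(
        f"http://{bridge_ip}/api/{username}/lights/{light_id}/state",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="PUT",
    )


def requests_for_tag(bridge_ip, username, tag_id):
    """Requests the endpoint would send when a given NFC tag is tapped."""
    return [hue_light_request(bridge_ip, username, light_id, on=True)
            for light_id in TAG_ACTIONS.get(tag_id, [])]
```

Sending a request is then `urllib.request.urlopen(req)`. Keeping the mapping on the server means that, in this sketch, a tag only needs to encode a URL containing its own ID.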

Demonstration of mobile-first capabilities.


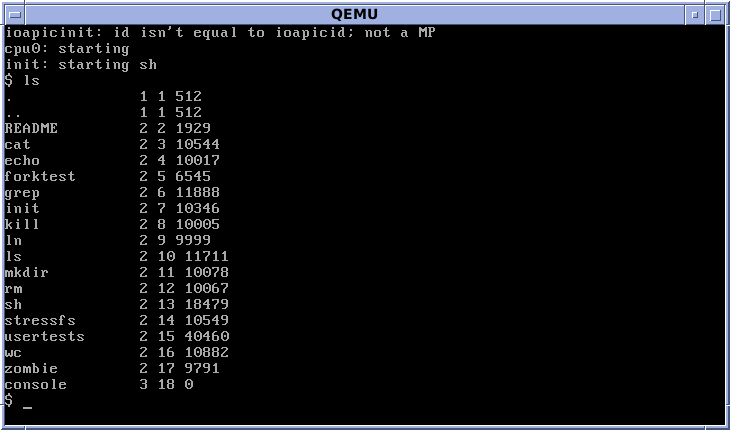

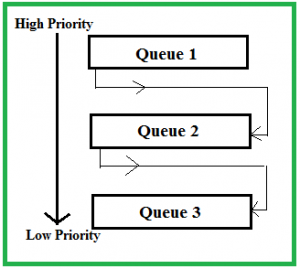

I modified the xv6 operating system source code to learn more about OS internals. Using C, I added new system calls and a new file system protection scheme based on UNIX octal permissions. I also changed the process scheduling algorithm from FIFO to a multilevel feedback queue (MLFQ) approach.
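UNIX octal permissions pack three read/write/execute triplets (owner, group, other) into one number such as `0o754`. A minimal Python sketch of the access check, assuming standard UNIX semantics; stock xv6 has no users or groups, so how the actual C implementation tracks ownership is not shown here, and the superuser bypass is omitted. The function name is hypothetical.

```python
READ, WRITE, EXECUTE = 4, 2, 1


def has_permission(mode, file_uid, file_gid, uid, gid, wanted):
    """UNIX-style check of an octal mode such as 0o754.

    The owner triplet applies if uid matches, else the group triplet if gid
    matches, else the 'other' triplet. `wanted` is a mask of READ/WRITE/EXECUTE.
    """
    if uid == file_uid:
        bits = (mode >> 6) & 0o7
    elif gid == file_gid:
        bits = (mode >> 3) & 0o7
    else:
        bits = mode & 0o7
    return (bits & wanted) == wanted
```

Only one triplet ever applies: an owner whose bits lack a permission is denied even if the group or other bits would allow it.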

From Wikipedia: xv6 is a modern reimplementation of Sixth Edition Unix in ANSI C for multiprocessor x86 and RISC-V systems. It was created for pedagogical purposes in MIT's Operating System Engineering course.

Multilevel feedback queue scheduling improves on the simple "FIFO scheduling" in xv6. It adjusts the priority of processes based on their run time, which adds fairness to the process scheduler and improves responsiveness for short jobs.
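The MLFQ rules can be illustrated with a small Python simulation (not the xv6 C code): new jobs start in the highest-priority queue, a job that uses its whole time slice is demoted one level, lower levels get longer slices, and an optional periodic boost moves every job back to the top to prevent starvation. The quanta and the boost interval are hypothetical, and all jobs are assumed to arrive at time 0 and never block on I/O.

```python
from collections import deque


def mlfq_schedule(bursts, quanta=(1, 2, 4), boost_every=None):
    """Simulate an MLFQ scheduler and return the (pid, run_time) slices in order.

    bursts:      dict pid -> total CPU time needed (all jobs arrive at t = 0)
    quanta:      time slice for each priority level, highest priority first
    boost_every: if set, move every job back to the top queue after this
                 many time units to prevent starvation
    """
    queues = [deque() for _ in quanta]
    remaining = dict(bursts)
    for pid in bursts:
        queues[0].append(pid)
    timeline, clock, last_boost = [], 0, 0

    while any(queues):
        # Always run the first job in the highest non-empty priority queue.
        level = next(i for i, q in enumerate(queues) if q)
        pid = queues[level].popleft()
        run = min(quanta[level], remaining[pid])
        timeline.append((pid, run))
        clock += run
        remaining[pid] -= run
        if remaining[pid] > 0:
            # Used its whole slice: demote (the bottom level stays round-robin).
            queues[min(level + 1, len(queues) - 1)].append(pid)
        if boost_every is not None and clock - last_boost >= boost_every:
            # Priority boost: move every waiting job back to the top queue.
            for q in queues[1:]:
                queues[0].extend(q)
                q.clear()
            last_boost = clock
    return timeline
```

With `{"A": 1, "B": 5}` the short job A finishes after one time unit, while the long job B sinks to the lower queues; this is the behavior that favors short, interactive processes.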

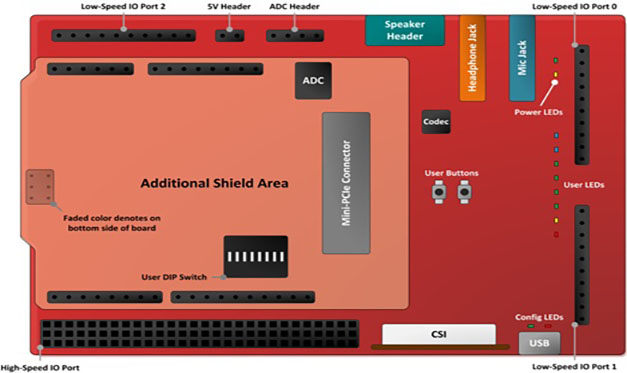

I worked in a team that developed an application for Intel's Galileo IoT board. We used the CSI-2 protocol for image capture and the generalized Precision Time Protocol (IEEE 802.1AS) for time synchronization. The system ran on a Yocto-based embedded Linux distribution and was implemented in Verilog and C.

IoT Shield for the Intel Galileo Development Board. It contains an FPGA for RTL development. Image extraction was written in Verilog. Image encoding was written in C.

The objective was to emulate the multi-camera "bullet time" photography seen in the movie The Matrix. What the filmmakers achieved with hundreds of cameras and extensive post-processing, we wanted to achieve with a few IoT shields and minimal post-processing, with the help of the 802.1AS time synchronization protocol.
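802.1AS synchronizes clocks by measuring the link delay between neighbors with a peer-delay exchange and then correcting the timestamps carried in Sync messages. A simplified Python sketch, assuming both clocks tick at the same rate (the standard also estimates a neighbor rate ratio) and that all times share one unit, such as nanoseconds; the function names are hypothetical.

```python
def mean_link_delay(t1, t2, t3, t4):
    """Peer-delay measurement, assuming equal clock rates on both ends.

    t1: Pdelay_Req sent      (initiator clock)
    t2: Pdelay_Req received  (responder clock)
    t3: Pdelay_Resp sent     (responder clock)
    t4: Pdelay_Resp received (initiator clock)
    The responder's clock offset cancels because it appears in both t2 and t3.
    """
    return ((t4 - t1) - (t3 - t2)) / 2


def clock_offset(sync_origin_time, correction, link_delay, sync_rx_time):
    """Offset of the local clock from the grandmaster (positive = local is ahead)."""
    return sync_rx_time - (sync_origin_time + correction + link_delay)
```

With `t1=100, t2=150, t3=160, t4=130`, the responder's clock offset cancels and the link delay comes out as 10. A shared time base of this kind is what would let several cameras be triggered at the same timestamp.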